PRIVILEGE IS INVISIBLE TO THOSE WHO HAVE IT

About

Welcome to our project on discrimination awareness, an interactive installation created by five passionate students from the University of Applied Sciences Munich, muc.dai.

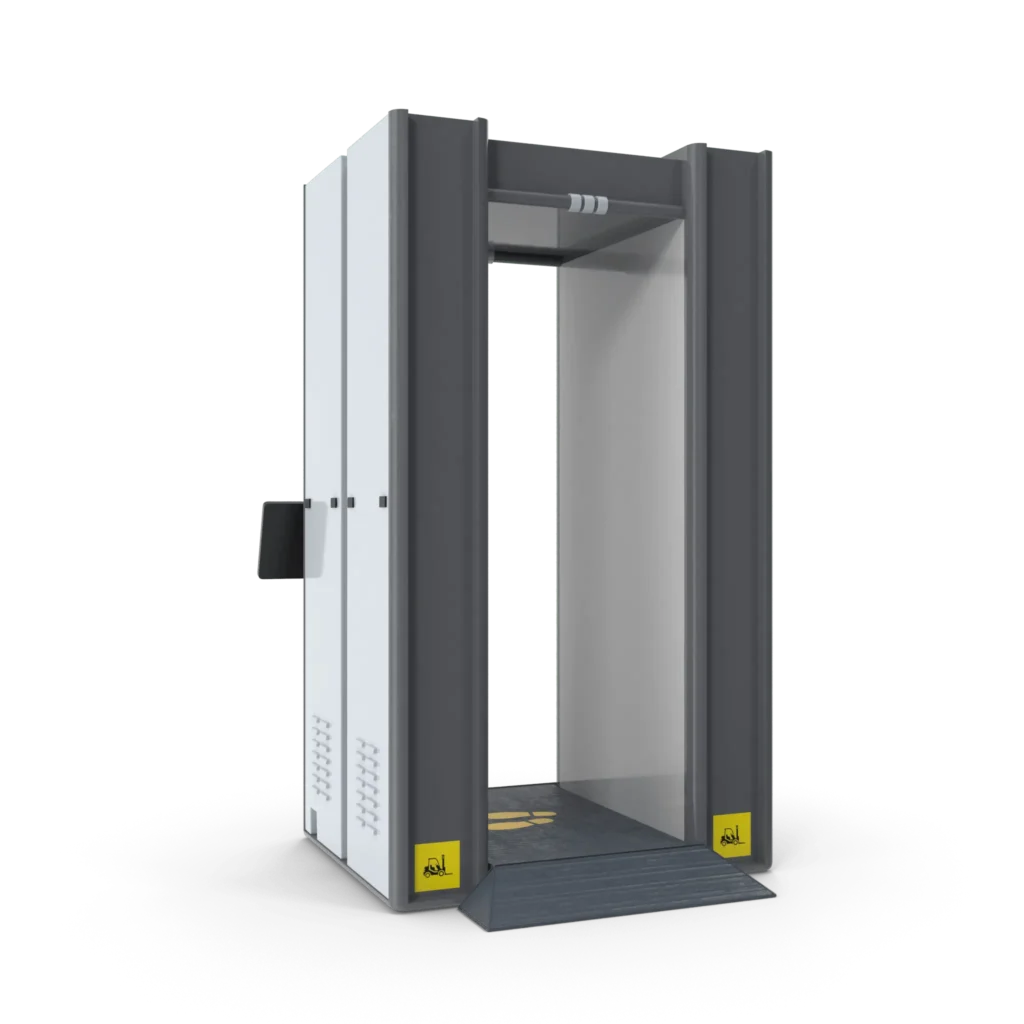

Our project raises awareness about discrimination through an interactive installation. Using AI-based face analysis, we built a face scanner that estimates a visitor's gender and race, highlighting how pervasive bias is in modern technology. The experience aims to provoke thought and empathy, encouraging participants to reflect on the realities of discrimination in our society.

The installation is designed with privacy in mind. All data is processed locally using our custom machine learning model, ensuring that no personal information is stored or shared externally.

For more insights into our process and the technology behind the installation, please browse through our website.

Thank you for your interest and support.

MAIN GROUNDS FOR DISCRIMINATION

Discrimination continues to undermine the principles of equality and human rights. By shedding light on these issues, we aim to foster awareness and promote a more inclusive and equitable world.

Race

Racial discrimination undermines equality and fuels social injustice.

Age

Age discrimination limits opportunities and disregards the value of experience.

Gender

Gender discrimination perpetuates inequality and hinders societal progress.

Sexual Orientation

Discrimination based on sexual orientation violates human rights and dignity.

Understanding AI Discrimination

AI discrimination happens when computer programs make unfair decisions that hurt certain groups of people. This can happen because the data used to teach the AI has biases or because the AI was not designed carefully. For example, face recognition technology might not recognize people with darker skin as well as those with lighter skin, causing problems like mistaken identity. In job hiring, an AI might favor certain types of candidates because it learned from biased past data. To fix this, we need to use fair data, test the AI thoroughly, and keep checking it to make sure it treats everyone equally.

- Discrimination in recruiting

- Discrimination in criminal prosecution

- Discrimination in financial decisions, e.g. credit scoring
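The text above calls for testing AI systems thoroughly so they treat everyone equally. As a rough illustration of what such a test can look like (this is not the code used in our installation), the Python sketch below compares a model's accuracy and its rate of positive decisions across groups. The group labels, the toy data and the helper names `group_rates` and `max_gap` are hypothetical; a real audit would use far more data and metrics chosen for the specific application.

```python
from collections import defaultdict


def group_rates(records):
    """Compute per-group accuracy and positive-decision rate.

    records: iterable of (group, y_true, y_pred) tuples with binary labels 0/1.
    """
    counts = defaultdict(lambda: {"n": 0, "correct": 0, "positive": 0})
    for group, y_true, y_pred in records:
        c = counts[group]
        c["n"] += 1
        c["correct"] += int(y_true == y_pred)  # prediction matched the truth
        c["positive"] += int(y_pred == 1)      # model gave a positive decision
    return {
        g: {
            "accuracy": c["correct"] / c["n"],
            "positive_rate": c["positive"] / c["n"],
        }
        for g, c in counts.items()
    }


def max_gap(rates, metric):
    """Largest difference in `metric` between any two groups."""
    values = [r[metric] for r in rates.values()]
    return max(values) - min(values)


# Hypothetical toy data: (group, true label, model prediction)
data = [
    ("A", 1, 1), ("A", 0, 0), ("A", 1, 1), ("A", 0, 0),
    ("B", 1, 0), ("B", 0, 0), ("B", 1, 1), ("B", 0, 1),
]

rates = group_rates(data)
print(rates)
print("accuracy gap:", max_gap(rates, "accuracy"))
print("positive-rate gap:", max_gap(rates, "positive_rate"))
```

In this toy data both groups receive positive decisions at the same rate, yet the model is right for every member of group A and for only half of group B. This is why checking a single number is not enough to show that a system treats everyone equally.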

Our Goal

Discrimination is a highly sensitive topic, especially since everyone defines it differently. Our goal is to evoke empathy in people who have never been put down for something beyond their control.

The most obvious such group is 'white men'. Our algorithm identifies them by their skin color and facial features and denies them the imaginary entry. Red lights and a voice announcement emphasize the rejection.

We do not want to embarrass people; we want them to feel empathy. Discrimination is very real.

MEET THE TEAM

We are five students studying 'Computer Science & Design' at the University of Applied Sciences Munich.

Tarek Shuieb

Suhal Enayat

Sebastian Wiest

Una Beganovic

Laura Preißer

WORK PROCESS

What started out as a simple university project turned into a formative experience in teamwork, self-improvement and understanding social injustice.

Brainstorming

Having been assigned the topic 'Discrimination' and required to work with AI, we had many ideas. Team members shared their own experiences of discrimination, and one member's story stuck with us: being racially profiled during airport security checks.

Planning

Everyone in our team has their own strengths, so we split into working groups. We discussed materials, possible algorithms and what we wanted our exhibit to look like, and settled on a security terminal.

Final Product

After a lot of trial and error, we finalized the project. We spent more than 500 euros on it, although we received only 100 euros in funding. We are satisfied with how everything turned out.